Partial derivative

A partial derivative is the derivative of a multivariable function with respect to one of its variables. For example, consider the function f(x, y) = sin(xy). When analyzing the effect of one variable of a multivariable function, it is often useful to mentally fix the other variables by treating them as constants. If we want to measure the relative change of f with respect to x at a point (x, y), we can differentiate with respect to x alone, treating y as a constant, to get:

$$\frac{\partial f}{\partial x} = y\cos(xy)$$

This new function, which we have denoted $\frac{\partial f}{\partial x}$, is called the partial derivative of f with respect to x. Similarly, if we fix x and vary y, we get the partial derivative of f with respect to y:

$$\frac{\partial f}{\partial y} = x\cos(xy)$$

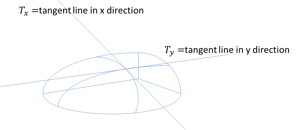

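Note: When denoting partial derivatives, $f_x$ is sometimes used instead of $\frac{\partial f}{\partial x}$.

Geometrically, $\frac{\partial f}{\partial x}$ and $\frac{\partial f}{\partial y}$ represent the slopes of the lines tangent to the graph of f at the point (x, y, f(x, y)) in the directions of the x- and y-axes, respectively.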

Formal definition

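The formal definition of the partial derivative of the n-variable function f(x1, ..., xn) with respect to xi is:

$$\frac{\partial f}{\partial x_i} = \lim_{h \to 0} \frac{f(x_1, \ldots, x_i + h, \ldots, x_n) - f(x_1, \ldots, x_i, \ldots, x_n)}{h}$$
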
Note: the phrase "ith partial derivative" means $\frac{\partial f}{\partial x_i}$.
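The limit in the formal definition can be approximated by plugging in a small but nonzero h. The sketch below does this for f(x, y) = sin(xy) from the introduction. The helper name `partial`, the test point (1, 2), and the step sizes are illustrative assumptions, not part of the definition. As h shrinks, the quotient should approach the exact value y cos(xy).

```python
import math


def partial(f, point, i, h=1e-6):
    """Difference-quotient approximation of the i-th partial derivative of f at point.

    Mirrors the limit definition: only coordinate i is nudged by h,
    and every other coordinate is held fixed.
    """
    shifted = list(point)
    shifted[i] += h
    return (f(*shifted) - f(*point)) / h


def f(x, y):
    return math.sin(x * y)


x0, y0 = 1.0, 2.0
exact = y0 * math.cos(x0 * y0)  # exact df/dx = y cos(xy)
for h in (1e-1, 1e-3, 1e-5):
    approx = partial(f, (x0, y0), 0, h)
    print(f"h={h:g}  approx={approx:.6f}  exact={exact:.6f}")
```

The step h cannot be taken all the way to zero in floating point, so a small fixed h stands in for the limit.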

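The partial derivatives of f(x1, ..., xn), such as $\frac{\partial f}{\partial x_i}$, are themselves functions of x1, ..., xn. Therefore we can just as easily take partial derivatives of partial derivatives, and so on. For example, for f(x, y) = sin(xy), the x-partial derivative of $\frac{\partial f}{\partial x} = y\cos(xy)$, denoted $\frac{\partial}{\partial x}\left(\frac{\partial f}{\partial x}\right)$, is $-y^2\sin(xy)$. Similarly: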

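$$\frac{\partial}{\partial y}\left(\frac{\partial f}{\partial x}\right) = \frac{\partial}{\partial y}\big(y\cos(xy)\big) = \cos(xy) - xy\sin(xy)$$

$$\frac{\partial}{\partial x}\left(\frac{\partial f}{\partial y}\right) = \frac{\partial}{\partial x}\big(x\cos(xy)\big) = \cos(xy) - xy\sin(xy)$$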

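Notice that $\frac{\partial}{\partial y}\left(\frac{\partial f}{\partial x}\right) = \frac{\partial}{\partial x}\left(\frac{\partial f}{\partial y}\right)$. It turns out that exchanging the order of the variables with respect to which we take partial derivatives gives the same answer for any function whose second partial derivatives are continuous. This fact is known as the equality of mixed partials.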

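Theorem: equality of mixed partials

If all second partial derivatives of f(x1, ..., xn) are continuous on an open set, then at every point of that set $\frac{\partial}{\partial x_j}\left(\frac{\partial f}{\partial x_i}\right) = \frac{\partial}{\partial x_i}\left(\frac{\partial f}{\partial x_j}\right)$ for all i and j.
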
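Note: When writing higher order partial derivatives, we normally use $\frac{\partial^2 f}{\partial x^2}$ and $\frac{\partial^2 f}{\partial y\,\partial x}$ in place of $\frac{\partial}{\partial x}\left(\frac{\partial f}{\partial x}\right)$ and $\frac{\partial}{\partial y}\left(\frac{\partial f}{\partial x}\right)$, respectively.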
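A quick numerical check of the mixed partials computed above for f(x, y) = sin(xy): nesting central differences should give nearly the same value for both orders and match cos(xy) - xy sin(xy). Central differences are a numerical stand-in for the exact derivatives, swapped in for illustration. The helpers `d_dx`/`d_dy`, the step h = 1e-4, and the point (0.7, 1.3) are arbitrary assumptions.

```python
import math


def f(x, y):
    return math.sin(x * y)


def d_dx(g, h=1e-4):
    """Return a function approximating dg/dx with a central difference."""
    return lambda x, y: (g(x + h, y) - g(x - h, y)) / (2 * h)


def d_dy(g, h=1e-4):
    """Return a function approximating dg/dy with a central difference."""
    return lambda x, y: (g(x, y + h) - g(x, y - h)) / (2 * h)


x0, y0 = 0.7, 1.3
f_xy = d_dy(d_dx(f))(x0, y0)  # d/dy of df/dx
f_yx = d_dx(d_dy(f))(x0, y0)  # d/dx of df/dy
exact = math.cos(x0 * y0) - x0 * y0 * math.sin(x0 * y0)
print(f_xy, f_yx, exact)
```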

Directional derivative

The partial derivatives represent how the function f(x1, ..., xn) changes in the direction of each coordinate axis. But how do we measure the relative change in f along an arbitrary direction that doesn't align with any coordinate axis? Let's say you are starting at a point p = (p1, ..., pn) and you want to find the relative change, or "slope," of f caused by an infinitesimal step along a unit vector $u = [u_1, \ldots, u_n]^T$. Then the directional derivative $D_u f$ of f in direction u at p is given by:

$$D_u f = u_1\left(\frac{\partial f}{\partial x_1}\right)_p + u_2\left(\frac{\partial f}{\partial x_2}\right)_p + \cdots + u_n\left(\frac{\partial f}{\partial x_n}\right)_p$$

where the p subscript means that we are taking partial derivatives at p. To understand why this measures the relative change along unit vector u, start with a function of a single variable. In that case, there is only one direction and so the directional derivative in that direction is

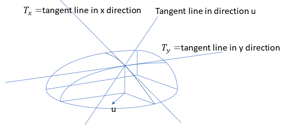

as we expect. In two variables, recall the image

and notice that the tangent lines make a plane that is also tangent to the curve at point p = (x0, y0). The equation of the plane is:

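$$
\begin{aligned}
z &= f(x_0, y_0) + \left(\frac{\partial f}{\partial x}\right)_p (x - x_0) + \left(\frac{\partial f}{\partial y}\right)_p (y - y_0) \\
  &= f(x_0, y_0) + \left(\frac{\partial f}{\partial x}\right)_p \Delta x + \left(\frac{\partial f}{\partial y}\right)_p \Delta y
\end{aligned}
$$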

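where Δx = x - x0 and Δy = y - y0 represent the changes in x and y away from (x0, y0). When (x, y) = (x0, y0), the z-value should just be f(x0, y0). For every unit step in the positive x direction, the z-value should increase by $\left(\frac{\partial f}{\partial x}\right)_p$ units. Similarly, the z-value should increase by $\left(\frac{\partial f}{\partial y}\right)_p$ units for every unit step in the positive y direction. If we define the change in z as Δz = z - f(x0, y0), then the change in the direction of the vector $u = [\Delta x, \Delta y]^T$ is

$$\Delta z = \left(\frac{\partial f}{\partial x}\right)_p \Delta x + \left(\frac{\partial f}{\partial y}\right)_p \Delta y.$$

We can generalize this to f(x1, ..., xn) and a step in the direction $u = [\Delta u_1, \ldots, \Delta u_n]^T$ to get

$$\Delta z = \left(\frac{\partial f}{\partial x_1}\right)_p \Delta u_1 + \cdots + \left(\frac{\partial f}{\partial x_n}\right)_p \Delta u_n.$$

If we require u to be a unit vector, then this expression is our original definition of a directional derivative.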
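To see that the sum formula really measures the slope along u, the sketch below compares it with a difference quotient taken along the line p + t·u. It reuses f(x, y) = sin(xy); the point p = (1, 0.5), the unit vector u = (3/5, 4/5), the step h, and the helper names are hypothetical choices for illustration.

```python
import math


def f(x, y):
    return math.sin(x * y)


def partials_f(x, y):
    """Exact partials of sin(xy): (y cos(xy), x cos(xy))."""
    c = math.cos(x * y)
    return (y * c, x * c)


def directional_derivative(p, u):
    """D_u f = sum of u_i times the i-th partial at p (u assumed to be a unit vector)."""
    return sum(ui * di for ui, di in zip(u, partials_f(*p)))


p = (1.0, 0.5)
u = (3 / 5, 4 / 5)  # unit vector: (3/5)^2 + (4/5)^2 = 1
h = 1e-6
# Slope of f along the straight line p + t*u, from t = 0 to t = h
along_line = (f(p[0] + h * u[0], p[1] + h * u[1]) - f(*p)) / h
print(directional_derivative(p, u), along_line)
```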

Example

Find the directional derivative of at p = (4, 2) in direction

.

First, compute the partial derivatives,

Then plug in the point p to get:

Then, the dot product is:

Gradient

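At a point p, the gradient, ∇fp, of f(x1, ..., xn) is defined as the vector:

$$\nabla f_p = \left[\left(\frac{\partial f}{\partial x_1}\right)_p, \ldots, \left(\frac{\partial f}{\partial x_n}\right)_p\right]^T$$

We can express the directional derivative at p in the direction of the unit vector u as the dot product

$$D_u f = \nabla f_p \cdot u.$$

One property of the dot product is that $v \cdot w = \|v\|\,\|w\|\cos\theta$, where ||v|| denotes the magnitude, or Euclidean norm, $\|v\| = \sqrt{v_1^2 + \cdots + v_n^2}$, and θ is the angle between v and w when both their tails are at the same point. Therefore,

$$D_u f = \|\nabla f_p\|\,\|u\|\cos\theta,$$

but ||u|| = 1, since u is a unit vector, so

$$D_u f = \|\nabla f_p\|\cos\theta.$$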

Example

Find the directional derivative for the previous example using the above formula.

The norm of the gradient is:

The cosine of the angle is:

Thus,

which matches our answer from the previous example.
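In two variables the angle θ can be measured independently of the dot product with `math.atan2`, which gives a way to check ||∇fp|| cos θ against ∇fp · u. This is a sketch for f(x, y) = sin(xy) at a hypothetical point p = (1, 0.5) and direction u = (3/5, 4/5); the atan2 approach only works in the plane.

```python
import math


def partials_f(x, y):
    """Exact partials of sin(xy): [y cos(xy), x cos(xy)]."""
    c = math.cos(x * y)
    return [y * c, x * c]


def norm(v):
    """Euclidean norm sqrt(v1^2 + ... + vn^2)."""
    return math.sqrt(sum(vi * vi for vi in v))


def dot(v, w):
    return sum(vi * wi for vi, wi in zip(v, w))


p = (1.0, 0.5)
u = [3 / 5, 4 / 5]       # unit vector
grad = partials_f(*p)    # gradient of f at p
# Angle between grad and u, measured from each vector's polar angle
theta = math.atan2(u[1], u[0]) - math.atan2(grad[1], grad[0])

via_dot = dot(grad, u)                    # D_u f = grad . u
via_angle = norm(grad) * math.cos(theta)  # D_u f = ||grad|| cos(theta), since ||u|| = 1
print(via_dot, via_angle)
```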

The directional derivative is maximized when cos(θ) = 1, that is, θ = 0, and minimized when cos(θ) = -1, that is, θ = π. When θ = 0, u points in the same direction as ∇fp, and when θ = π, u points in the opposite direction. So, as long as ∇fp ≠ 0, the directional derivative is maximized, with value ||∇fp||, when u points in the same direction as ∇fp, and minimized, with value -||∇fp||, when u points opposite to ∇fp. Remembering that the directional derivative measures relative change, we have proven the following theorem: wherever it is nonzero, the gradient points in the direction of steepest increase.
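The steepest-increase theorem can be sanity-checked by brute force. The sketch samples many unit vectors u = (cos a, sin a), evaluates the directional derivative for each, and picks the best one. It assumes f(x, y) = sin(xy), a hypothetical point p = (1, 0.5), and a grid of 3600 angles. The best sampled direction should nearly coincide with the gradient's direction, and its value should nearly equal ||∇fp||.

```python
import math


def partials_f(x, y):
    """Exact partials of sin(xy): (y cos(xy), x cos(xy))."""
    c = math.cos(x * y)
    return (y * c, x * c)


p = (1.0, 0.5)
gx, gy = partials_f(*p)

# Directional derivative along the unit vector (cos a, sin a)
def slope(a):
    return gx * math.cos(a) + gy * math.sin(a)

# Sample 3600 evenly spaced angles in [0, 2*pi) and keep the steepest one
best_a = max((2 * math.pi * k / 3600 for k in range(3600)), key=slope)
best_value = slope(best_a)
grad_norm = math.hypot(gx, gy)            # ||grad f_p||
print(best_value, grad_norm)
print(best_a, math.atan2(gy, gx))         # sampled angle vs. gradient's angle
```

The grid spacing is about 0.0017 radians, so the sampled angle can only match the gradient's angle to within about half of that.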

Multivariable chain rule

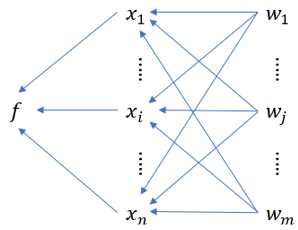

Suppose that each of the n variables of f(x1,..., xn) is also a function of m other variables, w1,..., wm, so each xi can be written as xi(w1,..., wm). Then we have the following diagram of dependencies where each arrow means that the variable at the tail of the arrow controls the variable at the head of the arrow.

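If we want to take the partial derivative $\frac{\partial f}{\partial w_j}$, we should look at all the possible paths from wj to f, which represent all the ways in which wj indirectly influences f. For a given path, say wj → xi → f, a change Δwj in wj is magnified by a factor of $\frac{\partial x_i}{\partial w_j}$ to produce a change in xi. This change in xi in turn is magnified by a factor of $\frac{\partial f}{\partial x_i}$ to produce a change in f. For this path, a change in wj gets magnified by a net factor of $\frac{\partial f}{\partial x_i}\frac{\partial x_i}{\partial w_j}$ to produce a change of approximately $\frac{\partial f}{\partial x_i}\frac{\partial x_i}{\partial w_j}\Delta w_j$ in f.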

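Then we add up the changes from all the possible paths to get the total change in f:

$$\Delta f \approx \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}\frac{\partial x_i}{\partial w_j}\,\Delta w_j$$

Dividing by Δwj gives the change in f relative to the change in wj; its limit as Δwj → 0 is the partial derivative $\frac{\partial f}{\partial w_j}$:

$$\frac{\partial f}{\partial w_j} = \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}\frac{\partial x_i}{\partial w_j}$$
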
Because we found $\frac{\partial f}{\partial w_j}$ by adding up all the changes in f caused by a change in wj, $\frac{\partial f}{\partial w_j}$ is sometimes called the total derivative of f with respect to wj.
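The path-sum formula can be checked on a small made-up example. The functions below are hypothetical and chosen only for illustration: f(x1, x2) = x1² x2 with x1 = w1 w2 and x2 = w1 + w2 (so n = m = 2). The chain-rule sum over the two paths w1 → x1 → f and w1 → x2 → f is compared with a central difference of the composite function in w1.

```python
def f(x1, x2):
    return x1 ** 2 * x2


# Hypothetical inner functions x1(w1, w2) and x2(w1, w2)
def x1_of(w1, w2):
    return w1 * w2


def x2_of(w1, w2):
    return w1 + w2


def df_dw1_chain(w1, w2):
    """Sum over the paths w1 -> x1 -> f and w1 -> x2 -> f."""
    x1, x2 = x1_of(w1, w2), x2_of(w1, w2)
    df_dx1, df_dx2 = 2 * x1 * x2, x1 ** 2  # partials of f
    dx1_dw1, dx2_dw1 = w2, 1.0             # partials of x1, x2 with respect to w1
    return df_dx1 * dx1_dw1 + df_dx2 * dx2_dw1


def df_dw1_direct(w1, w2, h=1e-6):
    """Central difference of the composite f(x1(w), x2(w)) in w1 alone."""
    def composite(a):
        return f(x1_of(a, w2), x2_of(a, w2))
    return (composite(w1 + h) - composite(w1 - h)) / (2 * h)


print(df_dw1_chain(1.0, 2.0), df_dw1_direct(1.0, 2.0))
```

At w = (1, 2) the chain-rule sum is exactly 28.0, which agrees with differentiating the composite w1³w2² + w1²w2³ directly: 3·1·4 + 2·1·8 = 28.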

Example

Given:

,

Find .